Meta to ban “HATE SPEECH” during 3 major sporting events

06/18/2024 / By Laura Harris

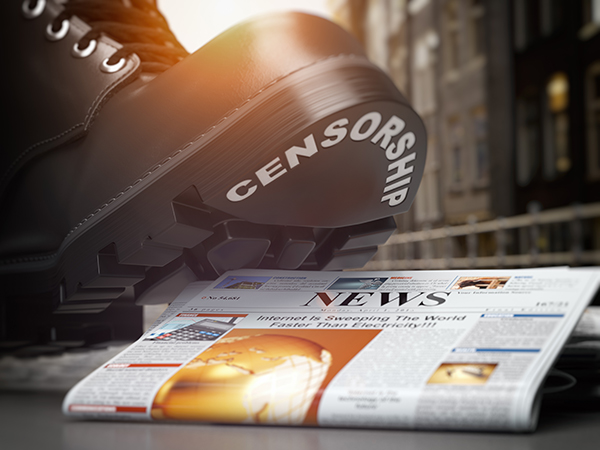

Mark Zuckerberg’s Meta Platforms is censoring all alleged “abusive behavior, bullying and hate speech” during three major sports events.

The Big Tech giant announced in a June 11 blog post that it will “set out multiple protections on their apps” (in particular Facebook, Instagram and Threads), ostensibly to “protect fans and athletes” from the aforementioned behavior.

“Across the world, athletes and their fans will be using our apps to connect around these moments. Most of these interactions will be positive,” Meta wrote in the June 11 blog post. “But unfortunately, it is likely there will be some individuals who will want to be abusive toward others.” (Related: International Olympic Committee prepares for AI integration in 2024 Paris Olympics.)

The tech giant’s announcement comes amid three major events in the sporting world:

- The Union of European Football Associations’ 2024 European Football Championship, which began on June 14 in Germany and is set to last a month

- The 2024 Summer Olympics in Paris with 10,500 athletes competing in 32 sports and 329 medal events, set to take place from July 26 to Aug. 11

- The 2024 Summer Paralympics also in the French capital featuring 4,400 athletes in 22 sports and 549 medal events, set to take place from Aug. 28 until Sept. 8

The blog post mentioned Facebook and Instagram implementing several features, including turning off direct message requests; controls for Hidden Words, Restrict, Limits, tags and mentions; moderation assistance; and enhanced blocking features and scam account detection. These features seek to help users moderate comments and limit unwanted interactions on their accounts.

Meta stated in the post: “We have clear rules against bullying, violent threats and hate speech – and we don’t tolerate it on our apps. As well as responding to reports from our community, outside of private messages we use technology to prevent, detect and remove content that might break these rules. Where a piece of content is deemed to violate our policies, our tech will either flag it to our teams for review, or delete it automatically where there is a clear violation.”

Meta taps notoriously inaccurate AI for censorship

The new features on the three apps are intended to “protect” athletes, presumably from disgruntled fans. However, Meta’s aim extends beyond protecting athletes.

Also included in the new features is a setting for athletes to automatically hide “offensive” comments. Instagram will use artificial intelligence to warn users before they post comments that could be deemed violative. The company also aims to tailor censorship specifically to protect women.

Yet the reliance on notoriously error-prone artificial intelligence (AI) algorithms to detect “abusive” or “offensive” comments and issue warnings about potential rule violations is concerning.

Meta ominously mentioned its cooperation with law enforcement, without specifying whether this means basic legal compliance or collaboration with government entities to censor speech. The company remarked: “We cooperate with law enforcement in their investigations and respond to valid legal requests for information.”

Moreover, in its blog post, Meta openly bragged about its thousands of censors and the vast network lined up to stifle free speech. The Big Tech company further boasted that it had censored 95 percent of allegedly violative “hate speech” before it was even reported to the platform.

“Since 2016, we’ve invested more than $20 billion into safety and security and quadrupled the size of our global team working in this area to around 40,000 people. This includes 15,000 content reviewers who review content across Facebook, Instagram and Threads,” Meta proudly declared.

Head over to Censorship.news for similar stories.

More related stories:

Zuckerberg’s Meta releases new censorship unit for upcoming elections.

Censorship remains a problem even after Musk’s Twitter takeover.

This article may contain statements that reflect the opinion of the author

COPYRIGHT © 2017 CENSORSHIP NEWS